DATE_TRUNC: A SQL Timestamp Function You Can Count On

Saul Jackman, Analyst

October 6, 2015•Updated on July 19, 2022

NaN minute read

Learn about ThoughtSpot + Mode!

See firsthand in our webinar how we're building a more collaborative, flexible, and accessible future of BI together with ThoughtSpot.

In a world of ever-expanding data streams, we rely on timestamps to organize data down to the millisecond.

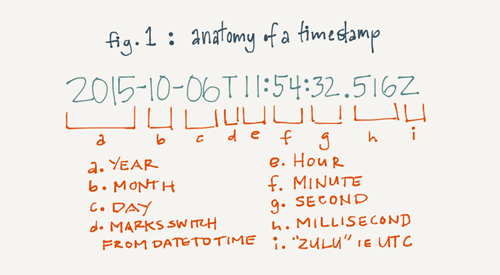

What is a time stamp?

A time stamp is a digital record of when an event has taken place. Timestamps are data types that contain both time and data parts. They include a ton of information: year, month, day, hour, minute, second, millisecond, and zulu.

They look something like this:

Pretty ugly, right?

This level of detail can be distracting.

Suppose you want to explore trends in user signups. You'll need to aggregate signup event data by the time each event occurred. Event timestamps can help, but you probably don’t need all the information they contain. Maybe you're interested in signups by year, month, or day but signups by hour, minute, or millisecond would be overkill.

That's where the DATE_TRUNC() function comes in handy. You can use it to round a timestamp to the interval you need.

Join Mode's Live Weekly Demo

Join a group session for an introductory demo of the Mode Platform. We’ll walk through key features and workflows.

What is DATE_TRUNC()?

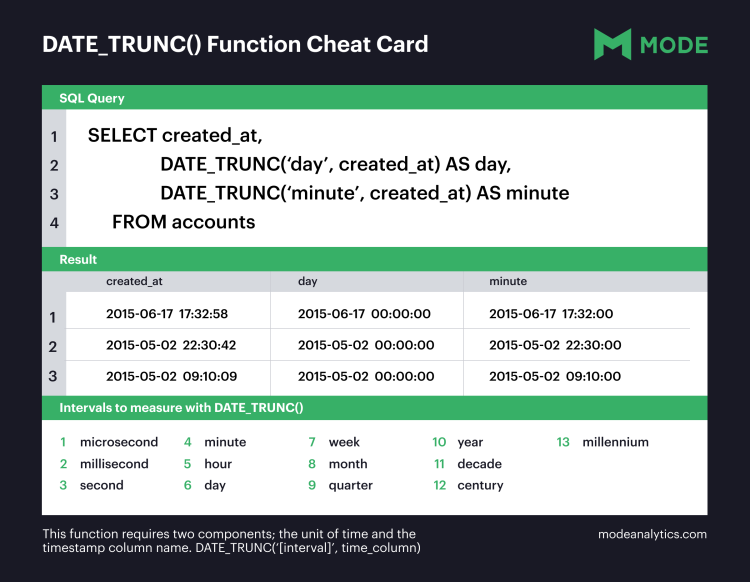

DATE_TRUNC() is a function used to round or truncate a timestamp to the interval you need. When used to aggregate data, it allows you to find time-based trends like daily purchases or messages per second.

How to use DATE_TRUNC() in SQL

To remove the unwanted detail of a timestamp, feed it into the DATE_TRUNC() function. The date_trunc function shortens timestamps so they are easier to read.

Syntax

DATE_TRUNC(‘[interval]’, time_column)

The time_column is the database column that contains the timestamp you'd like to round, and [interval] dictates your desired precision level. You can round off a timestamp to one of these units of time:

microsecond

millisecond

second

minute

hour

day

week

month

quarter

year

decade

century

millenium

If the above timestamp were rounded down to 'day', the result is:

2015-10-06T00:00:00.000Z

If it were rounded down to 'minute', it would look like this:

2015-10-06T11:54:00.000Z

Likewise, ‘second’ rounds down to the nearest second, 'hour' down to the nearest hour, and so on. 'week' rounds down to that Monday's date.

How to create a time series with truncated timestamps

DATE_TRUNC() is particularly useful when you want to aggregate information over an interval of time. Using one of the mock datasets from Mode's SQL School, let's explore time-related trends in user signups as an example use case.

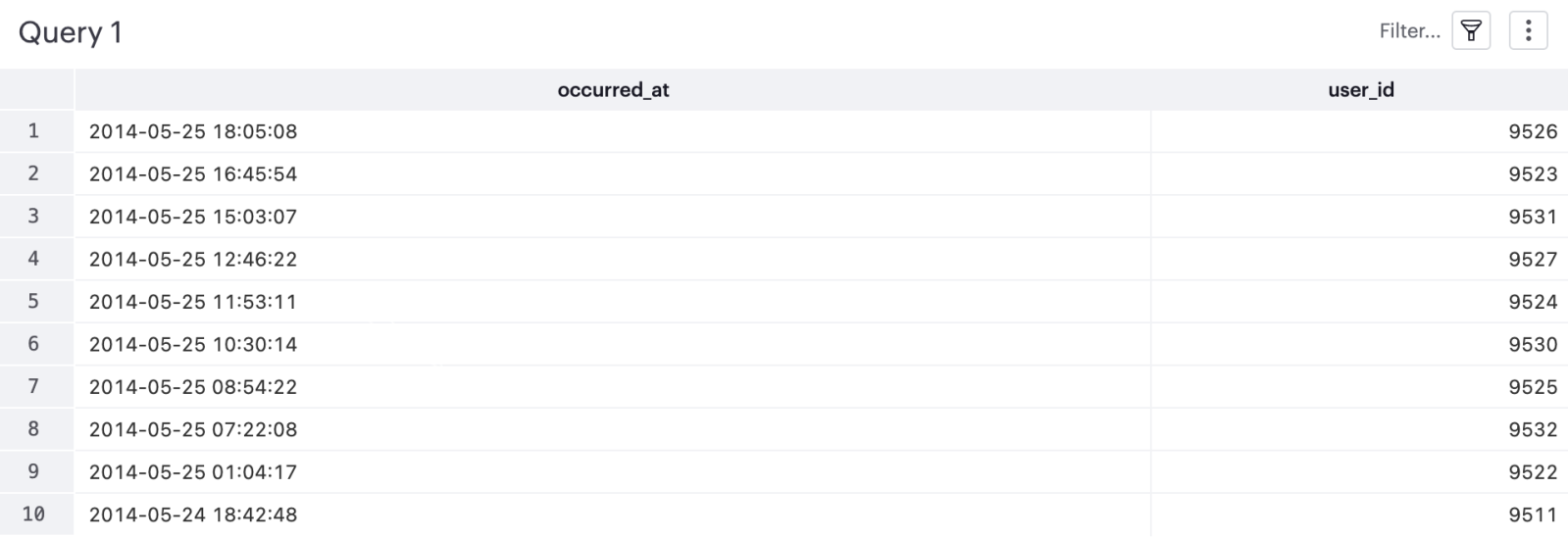

Let's start with this query:

SELECT occurred_at,

user_id

FROM benn.fake_fact_events

WHERE event_name = 'complete_signup'

AND occurred_at >= '2014-03-10'

AND occurred_at <= '2014-05-26'

ORDER BY 1 DESCWhich results in this table that shows the time and user ids of users who completed sign-ups between 3/1/14 and 5/26/14:

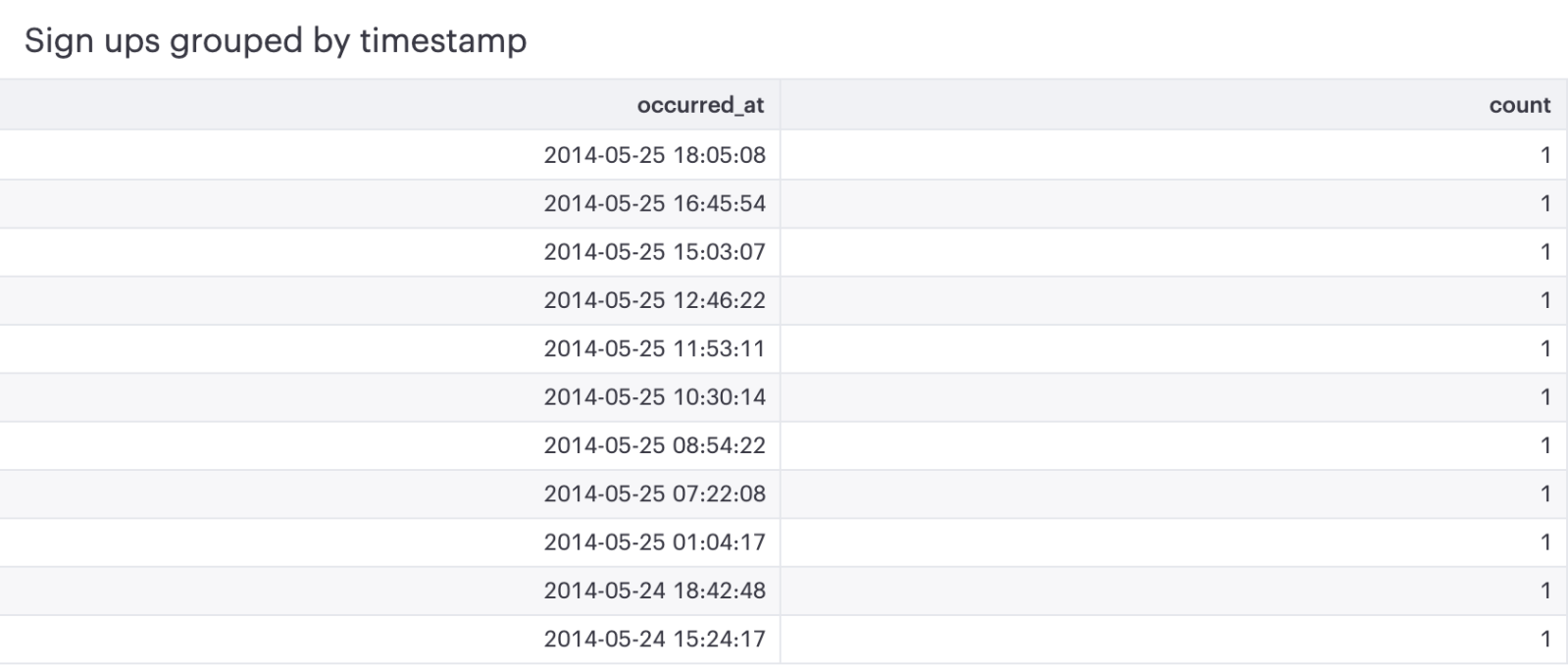

As you might guess, this data doesn't aggregate in a helpful way with the hour, minute, and millisecond data. Here’s what the query looks like when you try to group signups together by timestamp:

SELECT occurred_at,

COUNT(user_id)

FROM benn.fake_fact_events

WHERE event_name = 'complete_signup'

AND occurred_at >= '2014-03-10'

AND occurred_at <= '2014-05-26'

GROUP BY 1

ORDER BY 1 DESCAnd the results:

Not much different, right? This still isn’t helpful because it doest let us group in a useful way. These timestamps still contain too much information to shed light on any meaningful trends. Let's instead roll up the occurred_at events by the day they occurred:

DATE_TRUNC('day', occurred_at)

Here's the full query:

SELECT DATE_TRUNC('day', occurred_at) AS day,

user_id

FROM benn.fake_fact_events

WHERE event_name = 'complete_signup'

AND occurred_at >= '2014-03-10'

AND occurred_at <= '2014-05-26'

ORDER BY 1 DESCAnd the results:

Once timestamps are truncated, you can pull values by time interval

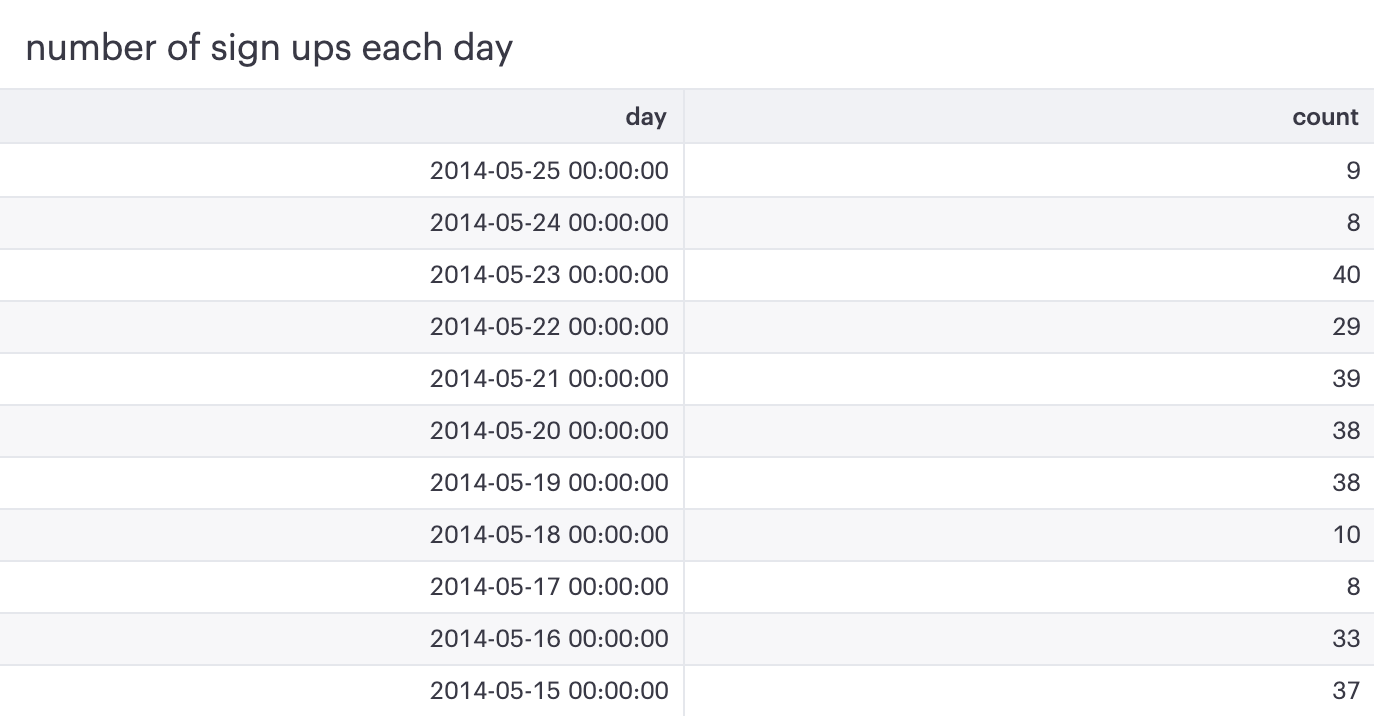

Using the truncated timestamps as keys and aggregating over rows with the same key, you can pool values by time interval. For example, you can count the number of signups that occurred on each day. Here's the query:

SELECT DATE_TRUNC('day', occurred_at) AS day,

COUNT(user_id)

FROM benn.fake_fact_events

WHERE event_name = 'complete_signup'

AND occurred_at >= '2014-03-10'

AND occurred_at <= '2014-05-26'

GROUP BY 1

ORDER BY 1 DESCHere's what you'll get:

These results can then be visualized as a daily time series:

It quickly becomes clear that signups dip every weekend. While this highlights the weekly cycle though, it's still hard to pin down the pattern week over week. Have signups increased over the last few months? Have there been any noticeable dips?

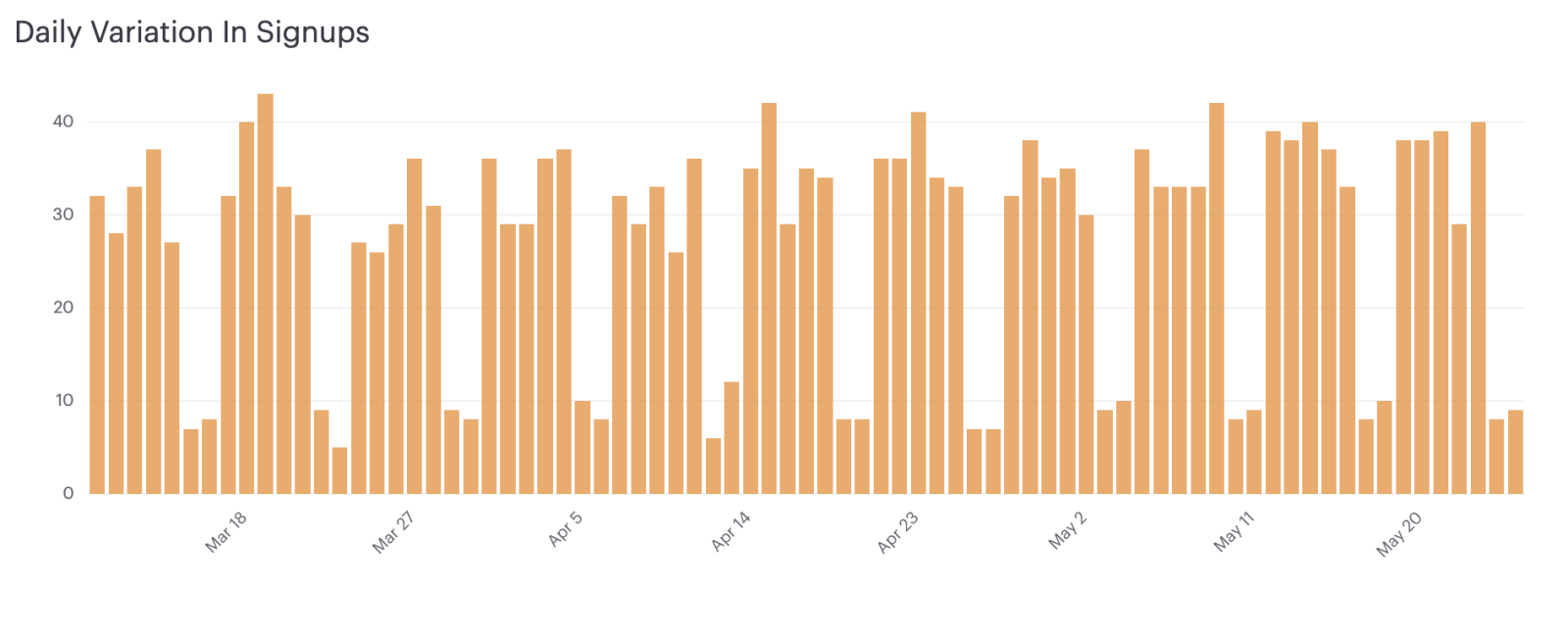

To get at this, we simply switch the level of aggregation from ‘day’ to ‘week’:

SELECT DATE_TRUNC('week', occurred_at) AS week,

COUNT(user_id)

FROM benn.fake_fact_events

WHERE event_name = 'complete_signup'

AND occurred_at >= '2014-03-10'

AND occurred_at <= '2014-05-26'

GROUP BY 1

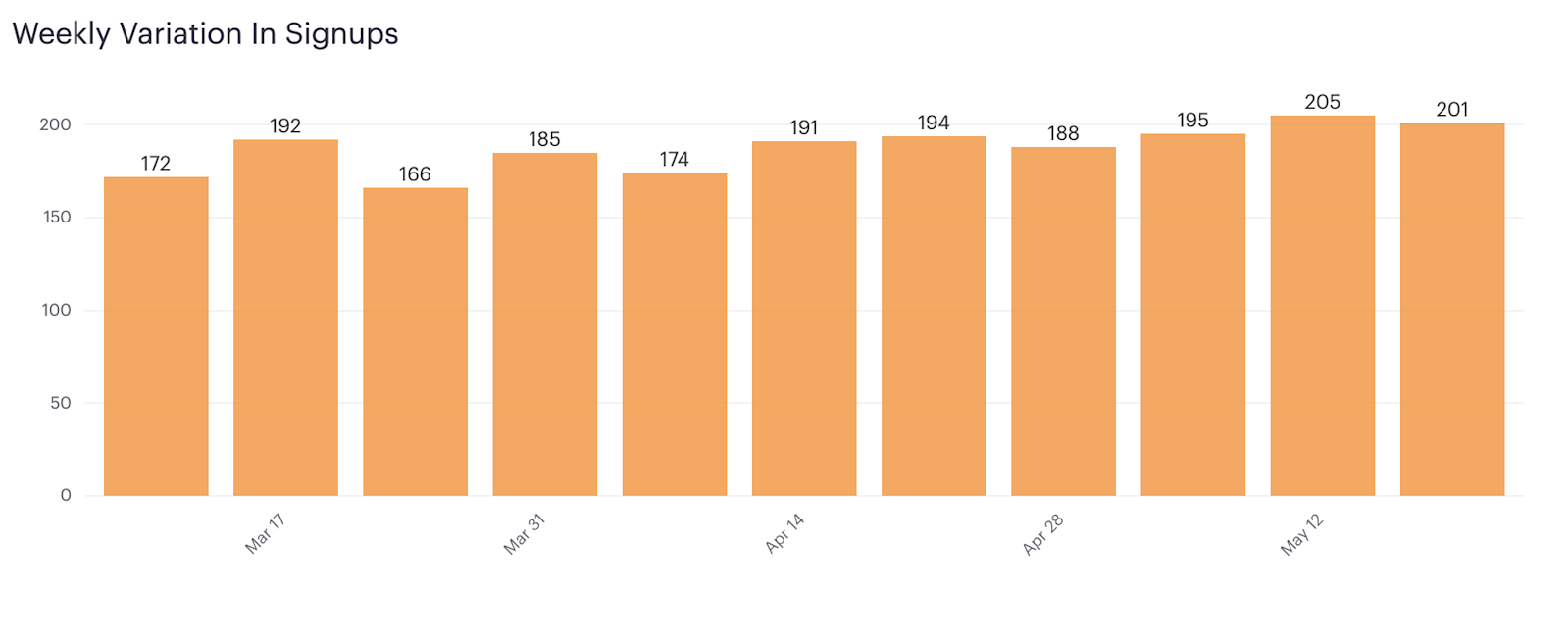

ORDER BY 1 DESCWe can now visualize the data as a weekly time series:

With the noise of the in-week fluctuations removed, we can now see that signups have held mostly stable, with a slight dip in late March that has since rebounded.

As you can see, the DATE_TRUNC() function can be tremendously useful for aggregating time-based data. Apply it to your data in Mode Studio, our freemium version.

Understanding Salesforce CRM Data: A Complete Guide for Analysts

You've just been handed your company's raw Salesforce data. What do you do first? Welcome to your crash course in understanding Salesforce and its data.

Watch a product tour of Mode

Curious about how Mode works? Sit back and watch the video—no reps ;)

Recommended articles

Get our weekly data newsletter

Work-related distractions for data enthusiasts.